NVMe server for vSAN virtual machines

Just a few years ago, an NVMe server was a premium option, whether in the enterprise or at home. However, with NVMe drives widely available and many storage solutions now built around them, building your own NVMe server is no longer unrealistic. If you need high-performance storage and speed is crucial, NVMe is THE technology to deliver it. With NVMe drives available from basically all vendors at many price levels, even cost is no longer a deterrent. In my home lab environment, I have three VMware vSAN hosts featuring all NVMe SSDs for both cache and capacity. I want to detail my experience using consumer drives in my home lab vSAN hosts and document what I have seen from a reliability and longevity standpoint.

What are NVMe SSDs?

NVMe stands for Non-Volatile Memory Express. It is a storage access and transport protocol for flash and next-generation SSDs. Currently, it delivers the highest throughput, lowest latency, and fastest response times for enterprise and other workloads.

Now that Intel is killing off Optane, the 3D XPoint-based technology that offered even lower latency than NAND-based NVMe SSDs, NAND-based NVMe is once again the top tier of storage available on the market. This also signals the end for Intel in the SSD business.

You may wonder what makes NVMe so fast. Unlike SATA and SAS drives, NVMe SSDs don’t sit behind a separate storage controller (HBA). They attach directly to the CPU over PCIe, which shortens the path for every I/O. NVMe also supports up to 64K queues with up to 64K commands per queue, and a typical M.2 or U.2 NVMe SSD uses four PCIe lanes.
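To put the queue numbers in perspective, here is a rough sketch comparing the theoretical number of commands in flight for AHCI/SATA and NVMe. The AHCI figures (one queue, 32 commands) are the usual NCQ limits, and the NVMe figures are the specification maximums. Real drives expose far fewer queues than the maximum, so treat this as an upper bound rather than a measurement.

```python
# Theoretical ceilings on outstanding commands: AHCI/SATA vs. NVMe.
AHCI_QUEUES = 1            # AHCI exposes a single command queue
AHCI_DEPTH = 32            # SATA NCQ allows up to 32 outstanding commands

NVME_IO_QUEUES = 65_535    # spec maximum number of I/O queues ("64K")
NVME_DEPTH = 65_535        # spec maximum outstanding commands per I/O queue


def max_outstanding(queues: int, depth: int) -> int:
    """Theoretical ceiling on commands in flight at once."""
    return queues * depth


ahci_total = max_outstanding(AHCI_QUEUES, AHCI_DEPTH)
nvme_total = max_outstanding(NVME_IO_QUEUES, NVME_DEPTH)

print(f"AHCI: {ahci_total:,} commands in flight")
print(f"NVMe: {nvme_total:,} commands in flight")
print(f"Ratio: roughly {nvme_total // ahci_total:,}x")
```

The practical benefit is less about the raw total and more about each CPU core being able to own its own queue, so cores don't contend for a single shared one.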

For those who want the nitty-gritty details, the next two sections come from the NVMe spec documentation: NVMe Base Specification, Revision 1.4c (nvmexpress.org)

NVMe SSD specifications

The NVM Express scalable interface is designed to address the needs of Enterprise and Client systems that utilize PCI Express based solid state drives or fabric connected devices. The interface provides optimized command submission and completion paths. It includes support for parallel operation by supporting up to 65,535 I/O Queues with up to 64 Ki – 1 outstanding commands per I/O Queue. Additionally, support has been added for many Enterprise capabilities like end-to-end data protection (compatible with SCSI Protection Information, commonly known as T10 DIF, and SNIA DIX standards), enhanced error reporting, and virtualization. The interface has the following key attributes:

• Does not require uncacheable / MMIO register reads in the command submission or completion path;

• A maximum of one MMIO register write is necessary in the command submission path;

• Support for up to 65,535 I/O Queues, with each I/O Queue supporting up to 65,535 outstanding commands;

• Priority associated with each I/O Queue with well-defined arbitration mechanism;

• All information to complete a 4 KiB read request is included in the 64B command itself, ensuring efficient small I/O operation;

• Efficient and streamlined command set;

• Support for MSI/MSI-X and interrupt aggregation;

• Support for multiple namespaces;

• Efficient support for I/O virtualization architectures like SR-IOV;

• Robust error reporting and management capabilities; and

• Support for multi-path I/O and namespace sharing.

This specification defines a streamlined set of registers whose functionality includes:

• Indication of controller capabilities;

• Status for controller failures (command status is processed via CQ directly);

• Admin Queue configuration (I/O Queue configuration processed via Admin commands); and

• Doorbell registers for scalable number of Submission and Completion Queues.
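The bullet above about a 4 KiB read fitting in the 64B command can be made concrete. Below is a minimal sketch that packs a Read command into a 64-byte submission queue entry using Python's `struct` module. The field layout follows my reading of the base specification. The buffer address, namespace ID, and command ID are made-up values for illustration, and nothing here talks to real hardware.

```python
import struct

# 64-byte NVMe submission queue entry, little-endian, no padding:
#   CDW0: opcode (1 byte), flags (1 byte), command identifier (2 bytes)
#   NSID (4), reserved CDW2-3 (8), metadata pointer (8),
#   PRP1 (8), PRP2 (8), CDW10..CDW15 (6 x 4 bytes)
SQE_FORMAT = "<BBHIQQQQ6I"
NVM_READ_OPCODE = 0x02


def build_read_command(cid: int, nsid: int, buffer_addr: int,
                       start_lba: int, num_blocks: int) -> bytes:
    """Pack a Read command; buffer_addr is a hypothetical page-aligned address."""
    nlb = num_blocks - 1                     # block count field is zero-based
    cdw10 = start_lba & 0xFFFFFFFF           # starting LBA, low 32 bits
    cdw11 = (start_lba >> 32) & 0xFFFFFFFF   # starting LBA, high 32 bits
    cdw12 = nlb & 0xFFFF                     # number of logical blocks
    return struct.pack(
        SQE_FORMAT,
        NVM_READ_OPCODE, 0, cid, nsid,
        0,            # reserved
        0,            # metadata pointer (unused here)
        buffer_addr,  # PRP1: one 4 KiB page holds the whole transfer
        0,            # PRP2: not needed for a single page
        cdw10, cdw11, cdw12, 0, 0, 0,
    )


# A 4 KiB read with 512-byte LBAs is 8 blocks (illustrative values).
cmd = build_read_command(cid=1, nsid=1, buffer_addr=0x1000_0000,
                         start_lba=2048, num_blocks=8)
print(len(cmd), "bytes")
```

Because the data pointer, starting LBA, and length all fit in one entry, the controller needs no follow-up fetch to start a small read.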

NVMe vs NVMe over Fabrics

The NVM Express base specification revision 1.4 and prior revisions defined a register level interface for host software to communicate with a non-volatile memory subsystem over PCI Express (NVMe™ over PCIe™). The NVMe™ over Fabrics specification defines a protocol interface and related extensions to the NVMe interface that enable operation over other interconnects (e.g., Ethernet, InfiniBand™, Fibre Channel). The NVMe over Fabrics specification has an NVMe Transport binding for each NVMe Transport (either within that specification or by reference).

In this specification, a requirement/feature may be documented as specific to NVMe over Fabrics implementations or to a particular NVMe Transport binding. In addition, support requirements for features and functionality may differ between NVMe over PCIe and NVMe over Fabrics implementations.

NVMe SSDs use cases

There are many use cases for NVMe drives. These include building an NVMe storage server, hosting databases, running an NVMe server for virtual machines (a virtualization server), and many others. If you are like me, you can even build an all-flash NVMe virtualization solution with VMware vSAN, using consumer-grade NVMe SSDs as the storage in your virtualization hosts.

A few years ago, NVMe was a premium, even on the consumer side. I think I was buying 1 TB NVMe SSDs in the neighborhood of $450-500 or so. Now, as I will detail more below, you can buy Samsung 980 Pro 2TB drives for under $250, which is amazing. The Samsung 980 Pros can do 7,000 MB/s read speeds. Can you imagine coupling NVMe storage with the latest Intel Xeon Scalable processors or even older Intel Xeon procs or AMD EPYC? The performance is really incredible with these types of solutions, even for home lab environments.
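Whether a drive can actually hit a figure like 7,000 MB/s depends on the PCIe link it sits on. The sketch below estimates x4 link bandwidth from the per-lane transfer rate and the 128b/130b line encoding used by PCIe 3.0 and 4.0. It ignores packet and protocol overhead, so real-world numbers land somewhat lower.

```python
# Approximate PCIe link bandwidth, ignoring packet/protocol overhead.
GT_PER_SEC = {3: 8.0, 4: 16.0}    # giga-transfers per second, per lane
ENCODING_EFFICIENCY = 128 / 130   # 128b/130b line encoding (PCIe 3.0 and 4.0)


def link_bandwidth_gb_s(generation: int, lanes: int = 4) -> float:
    """Return approximate one-direction link bandwidth in GB/s."""
    gbits_per_sec = GT_PER_SEC[generation] * lanes * ENCODING_EFFICIENCY
    return gbits_per_sec / 8      # 8 bits per byte


DRIVE_READ_GB_S = 7.0             # the 7,000 MB/s rating mentioned above

for gen in (3, 4):
    bw = link_bandwidth_gb_s(gen)
    verdict = ("link is the limit" if bw < DRIVE_READ_GB_S
               else "drive can reach its rated speed")
    print(f"PCIe {gen}.0 x4: {bw:.2f} GB/s -> {verdict}")
```

In other words, a PCIe 4.0 drive in a PCIe 3.0 slot still works, but the link caps sequential throughput at a little under 4 GB/s.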

Below is a Samsung 980 Pro PCIe 4.0 NVMe M.2 SSD

Home labs are a great use case for NVMe. Often, the bottleneck in a home lab environment is the storage subsystem: your disks. So, generally speaking, you can go a bit lighter on the CPU in a home lab, skipping the latest Intel Xeon Scalable processors in favor of an older Intel Xeon processor. NVMe storage, especially for home lab virtualization, is generally where you will see the biggest boost in performance.

My NVMe Server for virtual machines

I am still running on my (4) Supermicro SYS-5028D-TN4T servers and (1) Supermicro SYS-E301-9D-8CN8TP. Both models are running all flash NVMe drives with great performance. Note the following specs of the Supermicro SYS-5028D-TN4T:

Supermicro SYS-5028D-TN4T NVMe servers:

- No redundant power supplies

- 128 gigs RAM max 256 gigs RAM

- 2 10gig LAN ports for networking

- 2 1 gig LAN ports for networking

- Intel Xeon D processor

- SATA SSDs for boot

- Small form factor

- (4) hot swap drive bays which increases storage options

- As a note these are SOC (system on chip) so single proc, not swappable, not dual socket, etc

- These also have a single power supply

- 4 DIMM slots

- 3 NVMe SSD drives with storage capacity based on the size of NVMe drives configured in the server

The Supermicro SYS-E301-9D-8CN8TP is a different form factor but has very similar specs:

- 2 10 gig LAN ports for networking

- 6 1 gig LAN ports for networking

- Intel Xeon D processor

- SATA SSDs can be used for boot

- Small form factor

- No hot swap drive bays

- Single power supply

- 4 DIMM slots

- 128 gigs RAM max 256 gigs RAM

- 3 NVMe SSD drives with storage capacity based on the size of NVMe drives configured in the server

Using consumer NVMe SSDs for vSAN storage – failures?

One of the worries many have with using consumer NVMe SSDs for the home lab is the potential for failures. Generally, this is what sets enterprise-grade NVMe SSDs apart from consumer-grade NVMe SSDs. The enterprise-grade NVMe drives are guaranteed for many more writes than consumer-grade gear.
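Endurance ratings are easier to compare once they are converted to drive writes per day (DWPD). The sketch below does that conversion from a TBW (terabytes written) rating. The 600 TBW, 1 TB, 5-year, and 0.5 TB/day figures are illustrative assumptions, not numbers from my drives, so check the datasheet for your specific model.

```python
def drive_writes_per_day(tbw: float, capacity_tb: float, warranty_years: float) -> float:
    """Convert a TBW rating into full drive writes per day over the warranty."""
    return tbw / (capacity_tb * 365 * warranty_years)


def years_until_rated_writes(tbw: float, daily_writes_tb: float) -> float:
    """Years until the TBW rating is consumed at a steady daily write volume."""
    return tbw / (daily_writes_tb * 365)


# Illustrative consumer drive: 1 TB, rated 600 TBW, 5-year warranty.
consumer_dwpd = drive_writes_per_day(tbw=600, capacity_tb=1.0, warranty_years=5)

# Illustrative enterprise drive: 1 TB rated at 1 DWPD for 5 years.
enterprise_tbw = 1.0 * 1.0 * 365 * 5

# Hypothetical cache-tier workload writing 0.5 TB per day.
cache_years = years_until_rated_writes(tbw=600, daily_writes_tb=0.5)

print(f"Consumer drive: {consumer_dwpd:.2f} DWPD")
print(f"Enterprise drive at 1 DWPD: {enterprise_tbw:.0f} TBW")
print(f"Years to reach 600 TBW at 0.5 TB/day: {cache_years:.1f}")
```

A rating is a warranty threshold rather than a hard failure point, so drives can die earlier or last well beyond it.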

However, I will say either I have had exceptionally good fortune with the consumer-grade NVMe SSDs I have used or just got a really good life out of them. The Samsung drives I used in the lab have worked well, all in all. I have used a wide range of different models, including:

- Samsung 960 1TB (non-Pro)

- Samsung 970 Plus and non-Plus

- Samsung 980 Pro

The failures I have seen have mainly been cache drives in VMware vSAN that bit the dust after running for over a year. As expected, these take the most abuse in the disk group. The ones that failed were the non-Pro 960s, which are quite long in the tooth at this point. However, the beauty of using VMware vSAN for your home lab NVMe server platform for running virtual machines is that your data is protected using vSAN object storage.
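That protection costs capacity. As a rough sketch, assuming RAID-1 mirroring with a failures-to-tolerate (FTT) setting of 1, one cache drive per host that adds no capacity, and two 2 TB capacity drives per host, usable space works out as below. The drive counts and sizes are assumptions for illustration, and the math ignores slack space and metadata overhead.

```python
def vsan_usable_tb(hosts: int, capacity_drives_per_host: int,
                   drive_tb: float, ftt: int = 1) -> float:
    """Rough usable capacity with RAID-1 mirroring (FTT + 1 copies of data)."""
    if hosts < 2 * ftt + 1:
        raise ValueError("RAID-1 mirroring needs at least 2 * FTT + 1 hosts")
    raw_tb = hosts * capacity_drives_per_host * drive_tb  # cache drives excluded
    return raw_tb / (ftt + 1)


# Three hosts, each with 1 cache drive + 2 capacity drives of 2 TB (assumed sizes).
usable = vsan_usable_tb(hosts=3, capacity_drives_per_host=2, drive_tb=2.0)
print(f"Approximate usable capacity: {usable:.1f} TB")
```

Losing a cache drive takes its whole disk group offline, but with mirrored copies on other hosts the virtual machines keep running while you replace it.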

NVMe server with VMware vSAN changing failed NVMe drives

If you are running VMware vSAN on top of your NVMe server, it is easy to rebuild your vSAN disk group after replacing the NVMe SSD. How is this done? You can delete the disk group for the host and recreate it after replacing the failed NVMe SSD.

Step 1

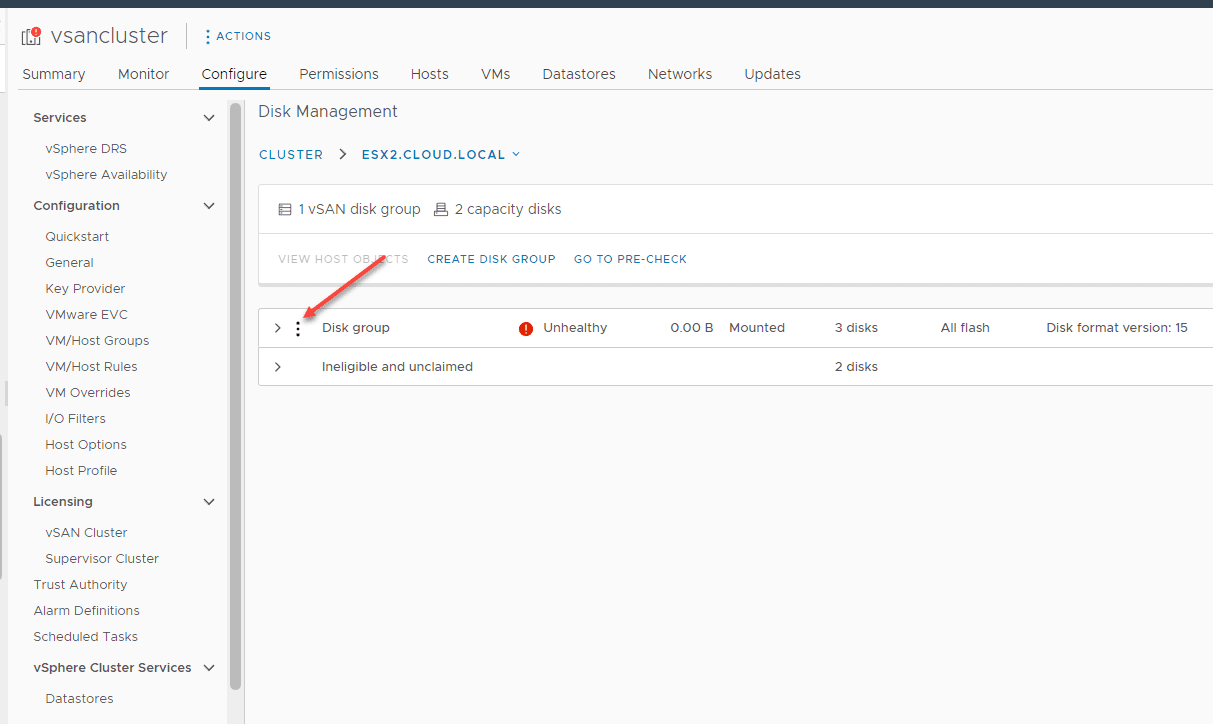

You don’t have to delete the disk group, as you can claim newly added drives and remove the ones that have failed. However, in the home lab environment, I have found that simply deleting the disk group is sometimes the cleaner approach to doing this.

Step 2

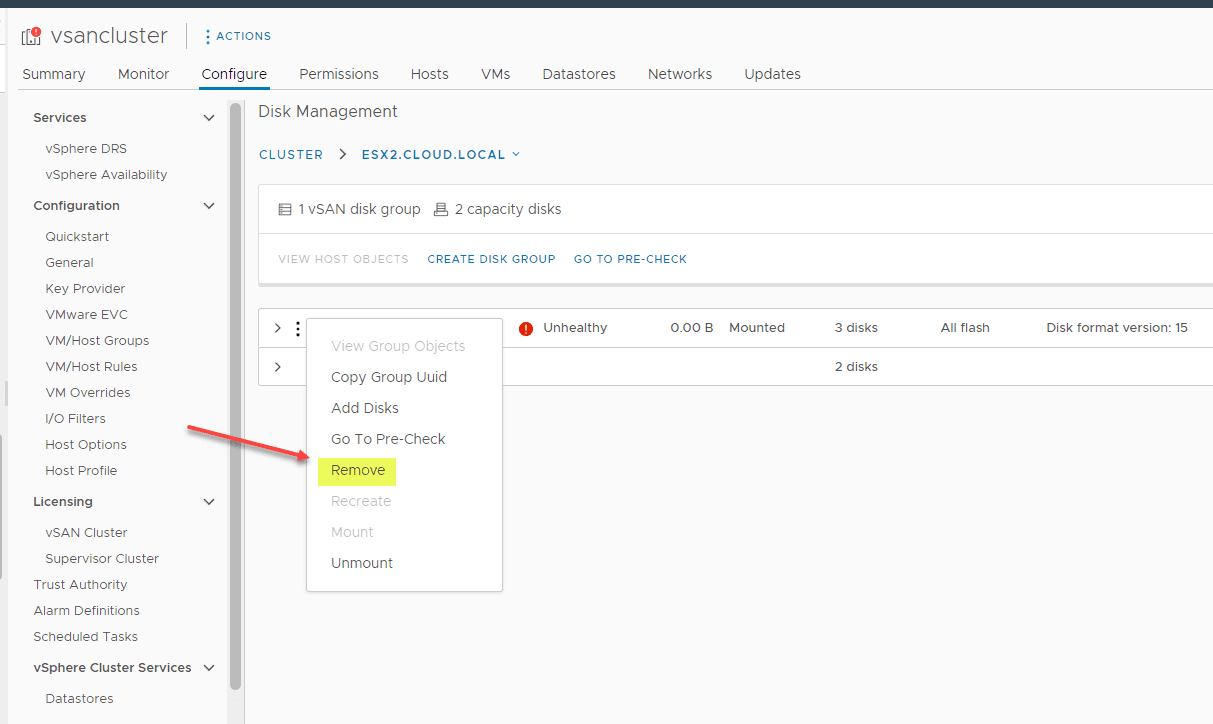

Clicking the ellipsis, you will see the Remove option.

Step 3

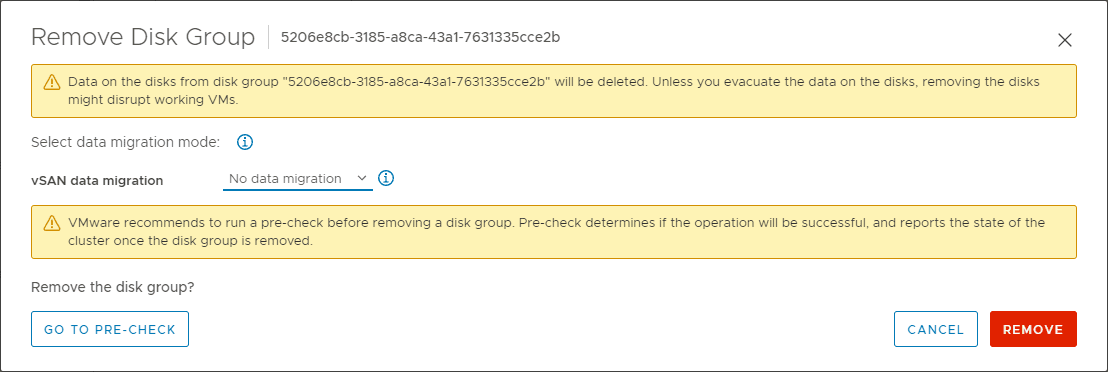

You will see the option to Remove the Disk Group. I have also selected the option for No data migration.

Step 4

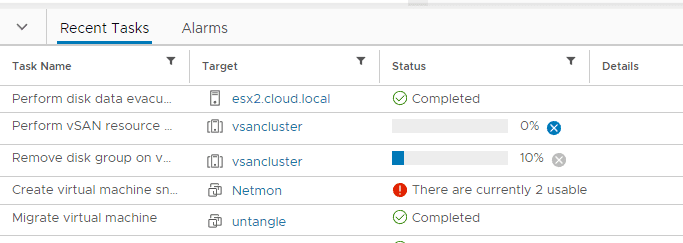

Once you click the Remove button, you will see the operation kick off in your vCenter tasks.

Now you can claim the newly available NVMe disks into your disk group and recreate the disk group.
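If you would rather work from the ESXi shell than the vSphere Client, the same remove-and-recreate flow can be sketched with `esxcli vsan storage`. The helper below only builds the command lines and does not run them. The device names are hypothetical placeholders, and the flags reflect my understanding of the esxcli syntax, so confirm them with `esxcli vsan storage remove --help` and `esxcli vsan storage add --help` on your ESXi build before using them.

```python
from typing import List


def remove_disk_group_cmd(cache_device: str,
                          evacuation_mode: str = "noAction") -> List[str]:
    """Removing the cache device removes its whole disk group.

    "noAction" is assumed to match the "No data migration" choice in the UI.
    """
    return ["esxcli", "vsan", "storage", "remove",
            "-s", cache_device, "-m", evacuation_mode]


def create_disk_group_cmd(cache_device: str,
                          capacity_devices: List[str]) -> List[str]:
    """Create a disk group from one cache device and its capacity devices."""
    cmd = ["esxcli", "vsan", "storage", "add", "-s", cache_device]
    for device in capacity_devices:
        cmd += ["-d", device]
    return cmd


# Hypothetical device identifiers; list real ones with `esxcli vsan storage list`.
OLD_CACHE = "t10.NVMe____EXAMPLE_FAILED_CACHE"
NEW_CACHE = "t10.NVMe____EXAMPLE_NEW_CACHE"
CAPACITY = ["t10.NVMe____EXAMPLE_CAP_1", "t10.NVMe____EXAMPLE_CAP_2"]

for command in (remove_disk_group_cmd(OLD_CACHE),
                create_disk_group_cmd(NEW_CACHE, CAPACITY)):
    print(" ".join(command))
```

In an all-flash setup, the capacity devices may also need to be tagged as capacity flash before they can be added, so check the vSAN documentation for your version.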

NVMe server for vSAN virtual machines FAQs

What are NVMe drives? NVMe stands for Non-Volatile Memory Express. It is a storage access and transport protocol for flash and next-generation SSDs. Currently, it delivers the highest throughput, lowest latency, and fastest response times for enterprise and other workloads.

Is it practical?

Are NVMe server hosts practical today for enterprise and home lab? With NVMe SSD prices coming down and no lack of availability, they are the preferred type of drive for high performance and any applications that need high speed storage access.

Are NVMe drives compatible with both Intel Xeon and AMD EPYC processors? Yes, they work with either and are not exclusive to a type of CPU. As long as the system has PCIe connectivity available, you can install NVMe drives, either on an add-on card or in an M.2 slot if there is one built into your board.

Wrapping Up

Hopefully, this overview of my NVMe server configuration for vSAN virtual machines gives you an idea of how my home lab is configured and why you should consider using NVMe SSDs in your virtualization hosts. The cost of NVMe is no longer prohibitive, and you reap many advantages, including high throughput and low latency.
